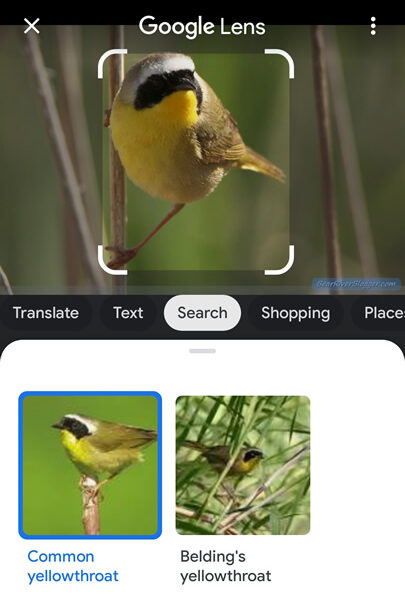

Google Lens, launched in 2017, has grown into one of the most capable AI-driven tools for visual search. It was originally designed to identify objects, landmarks, plants, and animals from still images, and a 2024 update now extends it beyond static images to video. Video search opens new ways to search, learn, and interact with the world through technology. This article covers the new video feature, the technology behind it, its benefits, practical usage scenarios, and its broader potential.

The New Video Update

In 2024, Google Lens added video search alongside its existing image-based search. The feature is powered by Google's Gemini, a family of multimodal AI models that can process video together with a spoken or typed question. Users can record a video, ask a question about what they see, and receive an answer based on the content of the recording. For instance, while recording a flower in a garden, a user can ask what kind of flower it is; the model processes the video, recognizes the subject, and returns a detailed response. Similarly, for a video of animals, Google Lens can explain their behavior or give information about the species shown.

The Technology Behind Google Lens

At the core of Google Lens is computer vision, a field of artificial intelligence (AI) that trains computers to interpret and make decisions based on visual data. In the video feature, the Gemini model processes the recorded footage, analyzing its frames to identify objects, patterns, and actions. Using deep learning, it interprets that content and combines it with information from the web to generate responses that aim to be accurate and relevant to the question asked.

With the 2024 video search update, Google has taken visual search beyond static images. The model can recognize not only still objects but also actions, movement, and context that unfold over time in a video. This lets it focus on the frames most relevant to the user's question and tailor its answer accordingly, making visual search more interactive.

Benefits of Google Lens Video Search

- Richer Contextual Information: With video input, Google Lens can deliver more contextual answers. Instead of relying on a single image, the AI can analyze a series of frames, giving it more data to work with. This can lead to more accurate and detailed responses, which is particularly useful for complex subjects such as animal behavior or plant identification.

- Enhanced Learning Experience: Google Lens's video search lets users learn as they explore. For example, after recording a video of a bird, users can ask about its species or behavior. Answers arrive within moments of recording, making learning more dynamic and engaging.

- Simplified User Interaction: The update reduces the need to type queries. Users can simply ask questions aloud while recording, and Google Lens returns an answer shortly afterward. This voice-driven interaction feels more natural and efficient, especially in situations where typing isn't practical.

- E-commerce Capabilities: Google Lens’s product recognition feature, which allows users to learn about an item’s price, reviews, and availability, has been expanded with video input. Users can now record a video of a product and get detailed insights, making shopping more convenient.

Usage Scenarios

- Educational Settings: In classrooms or on educational tours, students can use Google Lens to record videos of animals, plants, or historical landmarks and ask questions about them. The AI-powered responses add a new level of engagement and interactivity to learning.

- Travel and Exploration: Travelers can record videos of landmarks, artifacts, or natural scenes and ask Google Lens for information. This enhances travel experiences, as users can immediately learn more about the places they visit without needing a guidebook or searching manually.

- Shopping: Video search could significantly change both online and in-store shopping. By recording a video of a product, users can quickly find pricing, reviews, and deals, making purchasing decisions easier and faster.

- Nature Observation: Nature enthusiasts can use video search to identify plants, animals, or ecological phenomena while hiking or exploring. Google Lens returns detailed information about the surroundings captured in the video within moments.

Conclusion

The 2024 Google Lens video update marks a major advance in visual search technology. Powered by Gemini, it extends Google Lens from static images to video, allowing richer and more informative interactions. The ability to record a video, ask a question, and quickly receive a detailed answer benefits learning, shopping, travel, and everyday exploration.

As AI continues to evolve, tools like Google Lens will likely become even more integrated into daily life, simplifying how we search for and consume information. The shift from text-based to visual and video-based search is a natural progression that improves accessibility and convenience, putting powerful AI tools in the hands of every smartphone user. Google Lens's video search is not just a feature update but a significant step forward in how we use technology to understand the world around us. It has the potential to reshape education, exploration, and commerce by making information more accessible and engaging. Search is increasingly visual, and Google Lens is at the forefront of that shift.
