In the age of artificial intelligence (AI), two powerful technologies, voice cloning and deepfakes, have gained significant attention. These advancements are reshaping industries from entertainment to customer service while raising serious ethical concerns. Voice cloning is the process of replicating a person’s voice; deepfakes are highly realistic synthetic media, usually video and audio. Both hold vast potential but also pose risks. This article explores their origins, underlying technology, key players, applications, benefits, disadvantages, future prospects, and the ethical landscape surrounding them.

Introduction

Voice cloning creates a digital version of a person’s voice that can generate speech difficult to distinguish from the original. This is achieved using AI models trained on audio samples of the speaker. Deepfake technology goes a step further, using AI to create highly realistic videos or images of people doing or saying things they never did, often by manipulating facial movements and synchronizing them with a voice. Both technologies have surged in popularity, with uses ranging from entertainment to fraud, and they have raised excitement and alarm in roughly equal measure.

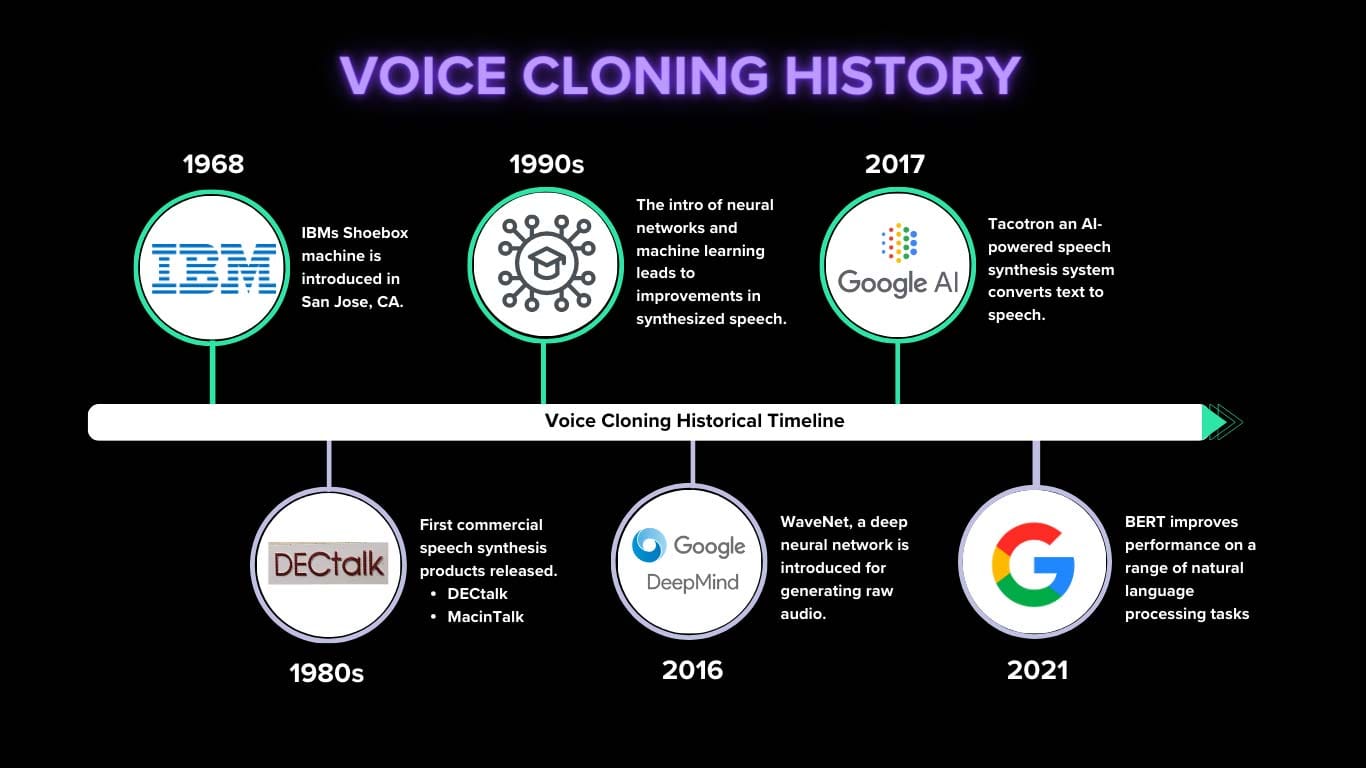

Early Developments in Voice Cloning and Deepfakes

The origins of voice cloning trace back to text-to-speech systems, which initially sounded robotic but became far more natural with the adoption of deep learning and natural language processing. Deepfake technology, in contrast, gained traction after Generative Adversarial Networks (GANs) were introduced in 2014. GANs were instrumental in creating hyper-realistic media, enabling face-swapping, video manipulation, and audio synchronization at a quality that was previously out of reach.

Technology Behind Voice Cloning and Deepfakes

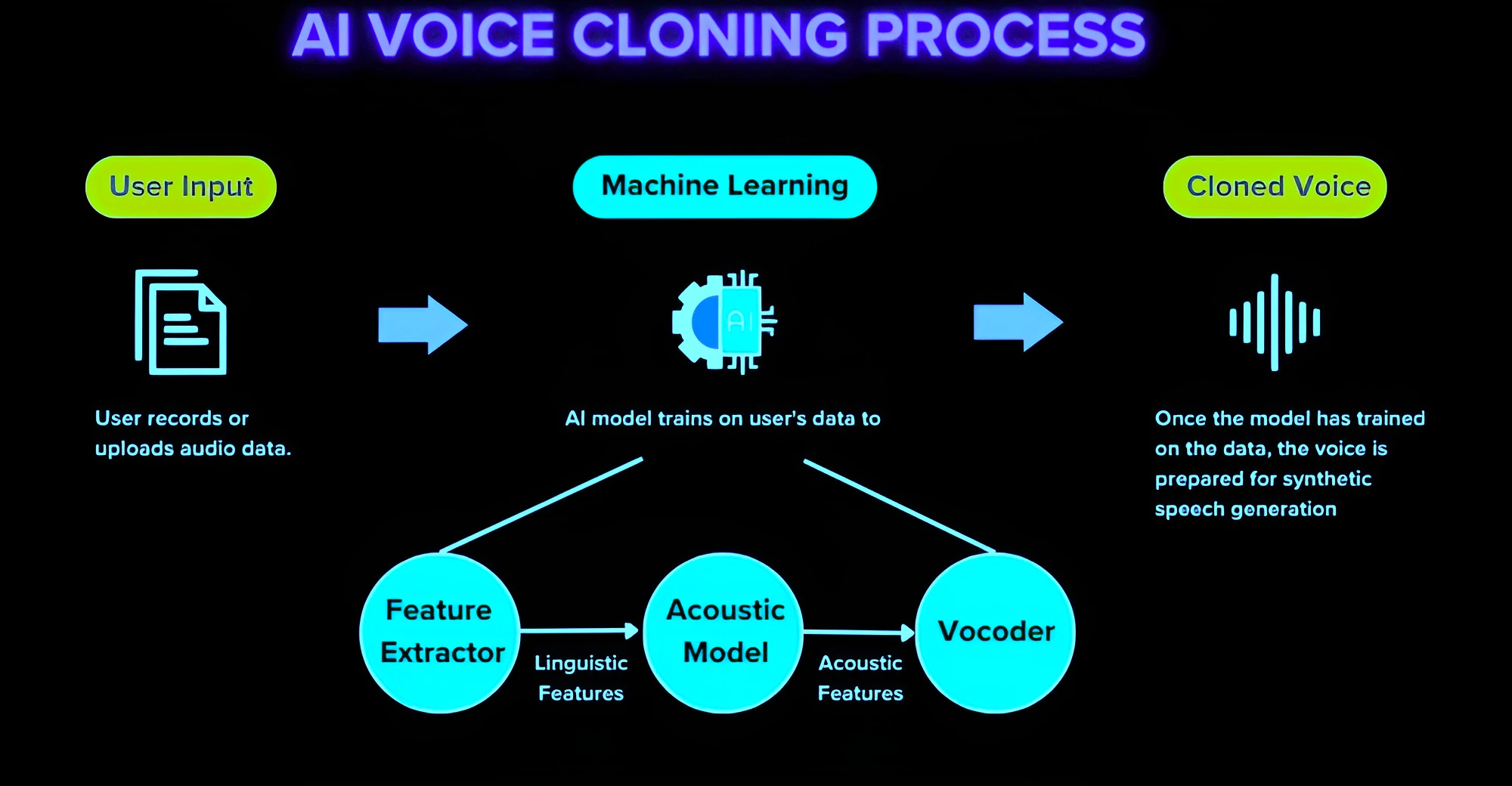

-Voice Cloning Technology

Voice cloning is primarily driven by deep learning models for speech synthesis, such as WaveNet (developed by DeepMind) and Tacotron 2 (developed by Google). Systems built on such models use neural networks to learn the intricate features of a person’s voice, such as pitch, tone, cadence, and inflection, and modern cloning systems can adapt to a new speaker from relatively small audio samples. Once trained, they can generate high-quality, nuanced speech that mimics the original speaker, in some cases in real time.

- Data Collection:

The first step in voice cloning is gathering recordings of the target speaker. Even a few minutes of audio can be sufficient for modern systems to produce a convincing clone.

- Neural Network Training:

The collected data is then processed through neural networks that learn to recognize patterns in voice data, including phonemes, syllables, and word formation.

- Text-to-Speech Conversion:

Once trained, the model can convert text inputs into speech in the cloned voice, replicating the speaker’s unique voice characteristics.
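To make one of the voice features mentioned above more tangible, here is a minimal sketch of pitch estimation using autocorrelation, written in plain Python. It is only an illustration of the kind of signal property a model learns from recordings, not how WaveNet or Tacotron 2 work internally. The function names (`synth_tone`, `estimate_pitch`), the synthetic test tone, and the 50 to 500 Hz search range are all assumptions made for this example.

```python
import math


def synth_tone(freq_hz, sample_rate, duration_s):
    """Generate a pure sine tone as a list of float samples (stand-in for real audio)."""
    n = int(sample_rate * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def estimate_pitch(samples, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate the fundamental frequency by finding the lag with the
    highest autocorrelation inside the [fmin, fmax] search range."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Correlate the signal with a copy of itself shifted by `lag` samples.
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    tone = synth_tone(freq_hz=200.0, sample_rate=8000, duration_s=0.1)
    print(f"Estimated pitch: {estimate_pitch(tone, 8000):.1f} Hz")
```

Real speech is far noisier than a sine tone, so practical systems rely on more robust pitch trackers or let the neural network learn such features directly from spectrograms.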

-Deepfake Technology

Deepfakes, particularly video deepfakes, commonly rely on GANs. In a GAN, two neural networks are trained against each other: a generator and a discriminator. The generator creates fake content (in this case, faces or voices), while the discriminator tries to tell that content apart from real examples. Over time, the generator improves its output until the deepfake becomes difficult to distinguish from real content.
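The generator and discriminator roles can be made concrete by looking at the loss each network tries to minimize. The following is a minimal sketch in plain Python, assuming the discriminator outputs a probability that its input is real. The function names are illustrative and not taken from any particular library, and the generator loss shown is the commonly used non-saturating variant rather than the only possible choice.

```python
import math


def bce(prob, label):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1 - prob))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored near 1 and fakes near 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The generator wants its fakes scored near 1 (i.e. judged as real)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # Hypothetical discriminator scores early in training: fakes are easy to spot.
    print("early generator loss:", generator_loss([0.1, 0.2]))
    # Hypothetical scores later on: the discriminator can no longer tell.
    print("late generator loss: ", generator_loss([0.5, 0.5]))
```

As the generator improves, the discriminator's scores for fakes drift toward 0.5, which is the point at which it is effectively guessing.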

- Face and Voice Mapping:

To create a convincing deepfake, an AI model maps the facial movements, expressions, and voice patterns of the subject.

- Synchronization:

The challenge lies in syncing the cloned voice with the facial expressions and lip movements of the deepfake video, a task AI models can now perform with startling accuracy.

- Refinement:

Over repeated cycles, the generated deepfake becomes more refined, eventually producing high-quality, realistic media.
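The synchronization step above can be illustrated with a deliberately simple stand-in. Production lip-sync models learn audio-visual alignment from data; the sketch below only shows the underlying idea of finding the time offset at which an audio signal and a mouth-movement signal line up best, using cross-correlation. The function name `best_lag` and both per-frame signals are hypothetical values invented for this example.

```python
def best_lag(audio_energy, mouth_open, max_shift):
    """Return the shift (in frames) of `mouth_open` relative to `audio_energy`
    that gives the highest correlation. A positive result means the video
    lags behind the audio by that many frames."""

    def score(shift):
        total = 0.0
        for i, a in enumerate(audio_energy):
            j = i + shift
            if 0 <= j < len(mouth_open):
                total += a * mouth_open[j]
        return total

    return max(range(-max_shift, max_shift + 1), key=score)


if __name__ == "__main__":
    # Hypothetical per-frame loudness of the cloned voice.
    audio = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0]
    # Hypothetical per-frame mouth opening, delayed by two frames.
    mouth = [0, 0, 0, 0, 1, 3, 1, 0, 0, 0]
    print("video lags audio by", best_lag(audio, mouth, max_shift=4), "frames")
```

Once the offset is known, the audio or video track can be shifted by that many frames so that speech and lip movement coincide.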

Companies Working on Voice Cloning & Deepfake Technology

Several companies are at the forefront of developing voice cloning and deepfake technology. Some focus on legitimate, beneficial applications, while others are working on detection tools to combat misuse.

- Descript:

Known for its advanced voice cloning tools, Descript allows users to create digital replicas of their voices, which are often used in content creation and podcasts.

- Resemble AI:

Resemble AI offers real-time voice generation for various industries, including gaming, film, and customer support. It also provides extensive customization features for its synthetic voices.

- Lyrebird:

Acquired by Descript, Lyrebird pioneered low-data voice cloning, making it possible to replicate voices with minimal training data.

- DeepMind (Google):

DeepMind’s WaveNet significantly advanced the field of voice synthesis, making machine-generated speech sound more natural and human-like.

- Sensity (formerly Deeptrace):

A company that works to detect and counter deepfake videos, Sensity is focused on cybersecurity and combating the malicious use of deepfake technology.

Uses of Voice Cloning and Deepfakes

Voice cloning and deepfake technology have been adopted across a wide range of industries:

- Entertainment:

Actors’ voices are cloned for film dubbing or re-creating performances, while deepfakes are used in special effects.

- Customer Service:

AI-generated voices are used to enhance virtual assistants and automated customer support systems.

- Accessibility:

Individuals who have lost their ability to speak due to illness or injury can use voice clones that replicate their original voice.

- Marketing:

Personalized audio ads can feature the cloned voices of celebrities or influencers.

- Gaming:

Realistic voice replication helps create immersive environments in video games.

- Political Satire/Parody:

Deepfakes have been used to create comedic content or satirical takes on public figures.

- Cybersecurity Threats:

Malicious actors have also used deepfakes and voice cloning for scams, impersonation, and spreading misinformation.

Benefits of Voice Cloning and Deepfakes

- Efficiency:

Automated systems using voice cloning can significantly reduce the need for human voiceover work or live customer service agents.

- Personalization:

AI-generated voices allow businesses to offer more personalized interactions, increasing user engagement.

- Accessibility:

Voice cloning provides a lifeline to people with speech disabilities, allowing them to communicate using their own digital voices.

- Cost Reduction:

In entertainment and gaming, deepfake and voice cloning technologies reduce the need for reshoots or complex post-production processes.

- Creativity:

Artists and filmmakers can use deepfake technology to tell stories in entirely new ways, opening up new creative possibilities.

Disadvantages and Ethical Concerns

- Misinformation and Fraud:

The biggest concern with deepfakes is their potential to create misinformation. Political deepfakes have been used to spread false information, and voice cloning can be exploited in identity theft or financial scams.

- Privacy Invasion:

Both technologies risk violating personal privacy by using someone’s likeness or voice without their consent.

- Trust Erosion:

As deepfake technology improves, it becomes harder for people to trust the authenticity of digital media, raising skepticism around all forms of video and audio content.

- Cybersecurity Risks:

Voice cloning and deepfakes pose threats to authentication systems that rely on voice recognition, potentially leading to fraud and unauthorized access.
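The risk to voice-based authentication can be illustrated with a simplified sketch. Many speaker verification systems compare a numeric "voiceprint" of the incoming audio against an enrolled one and accept the caller if the two are similar enough. The code below assumes that general design; the three-dimensional vectors, the `accepts` function, and the 0.8 threshold are invented for illustration and do not describe any specific product.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def accepts(enrolled, candidate, threshold=0.8):
    """Accept the caller if their voiceprint is close enough to the enrolled one."""
    return cosine_similarity(enrolled, candidate) >= threshold


if __name__ == "__main__":
    # Hypothetical voiceprints; real embeddings have hundreds of dimensions.
    enrolled_user = [0.90, 0.10, 0.40]
    cloned_voice = [0.85, 0.15, 0.42]   # a clone lands close to the target
    other_speaker = [0.10, 0.90, 0.20]  # an unrelated voice does not

    print("clone accepted:         ", accepts(enrolled_user, cloned_voice))
    print("other speaker accepted: ", accepts(enrolled_user, other_speaker))
```

A check like this cannot tell a good clone from the genuine speaker on similarity alone, which is why voice recognition is safer when combined with other authentication factors.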

Future Prospects

As AI continues to evolve, the future of voice cloning and deepfakes looks both promising and fraught with challenges. On the positive side, voice cloning may see widespread adoption in customer service, entertainment, and healthcare, with enhanced personalization and efficiency. Deepfakes will continue to push boundaries in digital content creation, with potential applications in education, virtual reality, and augmented reality.

However, stricter regulation will likely emerge to counter the dangers of deepfake misuse, with tech companies and governments collaborating to develop AI detection systems to flag and prevent the spread of harmful deepfake content. The ethical and legal implications will require ongoing debate, as balancing innovation with privacy and security becomes increasingly vital.

Conclusion

Voice cloning and deepfake technology have undoubtedly transformed digital media, offering new possibilities in personalized services, entertainment, and accessibility. Yet, the darker side of these technologies—their capacity for deception, fraud, and misinformation—cannot be ignored. Moving forward, society must develop the tools, laws, and ethical guidelines necessary to manage the responsible use of these powerful technologies while mitigating the risks they pose.